Benchmarking Multiphase Monte Carlo Sampling#

Motivation#

As a methods researcher one is often interested in testing new methods and benchmarking them together with state-of-the-art algorithms. hopsy provides the framework for conducting such comparisons, because it allows the methods researcher to focus on the algorithms, while the scaffolding, i.e., I/O, convergence diagnostics, and state-of-the-art implementations of existing algorithms are provided.

We show in this notebook how one can adapt build a multiphase monte carlo sampling plugin for hopsy

Preimplemented Multiphase sampling#

The multiphase Monte Carlo algorithm is also available preimplemented in hopsy, see MultiphaseMonteCarlo.ipynb for a short example

Background#

Instead of rounding before sampling, multiphase monte carlo sampling rounds adaptively based on the samples of the Markov chain during a run. It has been reported to be an efficient strategy, if the underlying Markov chain is efficient enough.

The Idea has found previous adaption in the artificially centered coordinate hit-and-run and the optGP sampler. However, Coordinate Hit-and-Run Rounded showed greater and more stable performance (https://pubmed.ncbi.nlm.nih.gov/28158334/) than both of those algorithms.

The idea is resurrected and shown to be efficient given a better Markov chain implementation. See https://drops.dagstuhl.de/opus/volltexte/2021/13820/pdf/LIPIcs-SoCG-2021-21.pdf

[1]:

import hopsy

from matplotlib import pyplot as plt

import numpy as np

from typing import List

import time

import PolyRound

Custom proposals#

In case the proposal you want to benchmark is not already implemented, it is possible to implement it yourself in a small class. It is then possible to seamlessy integrate this proposal into existing workflows with hopsy. As an example, we implement Overrelaxed Hit-and-Run, see https://ui.adsabs.harvard.edu/abs/2015IJMPC..2650010D/abstract

[2]:

class OverrelaxedHitAndRunProposal:

def __init__(

self, problem: hopsy.Problem, starting_point: np.ndarray, epsilon: float = 1e-10

):

self.A = problem.A

self.b = problem.b

self.state = starting_point

self.proposal = self.state

self.epsilon = epsilon

def propose(self, rng) -> np.ndarray:

"""This method is called by hopsy.MarkovChain. Returns the proposal"""

direction = np.random.randn(self.state.shape[0])

direction /= np.linalg.norm(direction, axis=0)

inverse_distances = np.nan_to_num(

np.divide(self.A @ direction, self.b - self.A @ self.state)

)

forward_distance = 1.0 / np.max(inverse_distances)

backward_distance = 1.0 / np.min(inverse_distances)

L = forward_distance - backward_distance

a = 2 * (-backward_distance) / L - 1

if a == 0:

a = self.epsilon

t_1 = (

L * (1 + a - np.sqrt((1 + a) ** 2 - 4 * a * np.random.uniform())) / (2 * a)

)

t_0 = -backward_distance

self.proposal = self.state + (t_1 - t_0) * direction

return self.proposal

More complex custom sampling schemes#

In addition to custom proposals, it is also possible to implement adaptive workflows. In this case we implement the adaptive rounding from https://drops.dagstuhl.de/opus/volltexte/2021/13820/pdf/LIPIcs-SoCG-2021-21.pdf using the Billiard walk that is already implemented in hopsy.

[3]:

# rounding based on samples, as suggested in https://drops.dagstuhl.de/opus/volltexte/2021/13820/pdf/LIPIcs-SoCG-2021-21.pdf

def svd_rounding(samples, polytope):

# We concatenate them samples to [n_dim, n_iterations] for rounding

stacked_samples = np.vstack(

(samples[0, :, :], samples[1, :, :], samples[2, :, :], samples[3, :, :])

)

mean = np.mean(stacked_samples, axis=0)

stacked_samples = stacked_samples - mean

U, S, Vh = np.linalg.svd(stacked_samples)

# Rescaling as mentioned in https://drops.dagstuhl.de/opus/volltexte/2021/13820/pdf/LIPIcs-SoCG-2021-21.pdf

s_ratio = np.max(S) / np.min(S)

S = S / np.min(S)

if np.max(S) >= 2.0:

S[np.where(S < 2.0)] = 1.0

else:

S = np.ones(S.shape)

Vh = np.identity(Vh.shape[0])

rounding_matrix = np.transpose(Vh).dot(np.diag(S))

# Transforms current last samples into new polytope

sub_problem = hopsy.Problem(

polytope.A, polytope.b, transformation=rounding_matrix, shift=mean

)

starting_points = hopsy.transform(

sub_problem, [samples[i, -1, :] for i in range(samples.shape[0])]

)

polytope.apply_shift(mean)

polytope.apply_transformation(rounding_matrix)

return s_ratio, starting_points, polytope, sub_problem

Helper functions#

In this section we implement some helper functions for executing the benchmarks as well as a function which constructs our benchmark problems

[4]:

# Helper functions for calling the sampling algorithms and defining the polytopes

def run_multiphase_sampling(

proposal,

polytope,

seeds: List,

target_ess: float,

steps_per_phase: int,

starting_points: List,

):

limit_singular_ratio_value = 2.3 # from https://drops.dagstuhl.de/opus/volltexte/2021/13820/pdf/LIPIcs-SoCG-2021-21.pdf

assert len(starting_points) == len(seeds)

rngs = [hopsy.RandomNumberGenerator(s) for s in seeds]

ess = 0

iterations = 0

s_ratio = limit_singular_ratio_value + 1

sampling_time = 0

samples = None

last_iteration_did_rounding = True

while ess < target_ess:

iterations += 1

print("\t\titer:", iterations)

internal_polytope = polytope

p = hopsy.Problem(internal_polytope.A, internal_polytope.b)

markov_chains = [

hopsy.MarkovChain(proposal=proposal, problem=p, starting_point=s)

for s in starting_points

]

start = time.time()

acceptance_rate, _samples = hopsy.sample(

markov_chains, rngs, n_samples=steps_per_phase, thinning=1, n_procs=1

)

end = time.time()

assert 0 < np.min(p.b - p.A @ _samples[0, -1, :])

assert 0 < np.min(p.b - p.A @ _samples[1, -1, :])

assert 0 < np.min(p.b - p.A @ _samples[2, -1, :])

assert 0 < np.min(p.b - p.A @ _samples[3, -1, :])

sampling_time += end - start

if s_ratio > limit_singular_ratio_value:

samples = _samples

# also measures the rounding time

start = time.time()

s_ratio, starting_points, internal_polytope, sub_problem = svd_rounding(

samples, internal_polytope

)

end = time.time()

sampling_time += end - start

print("\t\ts_ratio:", s_ratio)

last_iteration_did_rounding = True

else:

if last_iteration_did_rounding:

# next operation transforms last samples to current space before concatenating

for j in range(samples.shape[0]):

samples[j] = hopsy.transform(

sub_problem, [samples[j, i, :] for i in range(samples.shape[1])]

)

last_iteration_did_rounding = False

samples = np.concatenate((samples, _samples), axis=1)

starting_points = [samples[i, -1, :] for i in range(samples.shape[0])]

ess = np.min(hopsy.ess(samples))

print("\t\tess", str(ess))

steps_per_phase += 100

# transforms back to full space

_samples = np.zeros(

(samples.shape[0], samples.shape[1], polytope.transformation.shape[0])

)

for j in range(samples.shape[0]):

_samples[j] = hopsy.back_transform(

hopsy.Problem(

A=internal_polytope.A,

b=internal_polytope.b,

transformation=internal_polytope.transformation,

shift=internal_polytope.shift,

),

[samples[j, i, :] for i in range(samples.shape[1])],

)

return _samples, iterations, ess, sampling_time

def run_sampling(

proposal,

polytope,

seeds: List,

target_ess: float,

steps_per_phase: int,

starting_points: List,

thinning=None,

):

rngs = [hopsy.RandomNumberGenerator(s) for s in seeds]

problem = hopsy.Problem(polytope.A, polytope.b)

thinning = (

thinning if thinning is not None else int(float(problem.A.shape[1] ** 2) / 6)

)

ess = 0

iterations = 0

# also measures the rounding time

start = time.time()

rounded_problem = hopsy.round(problem)

# transforms starting points into rounded space

starting_points = hopsy.transform(rounded_problem, starting_points)

end = time.time()

sampling_time = end - start

samples = None

markov_chains = [

hopsy.MarkovChain(problem=rounded_problem, starting_point=s)

for s in starting_points

]

while ess < target_ess:

iterations += 1

print("\t\titer:", iterations)

for i in range(len(starting_points)):

markov_chains[i].proposal = proposal(

rounded_problem, starting_point=starting_points[i]

)

start = time.time()

acceptance_rate, _samples = hopsy.sample(

markov_chains, rngs, n_samples=steps_per_phase, thinning=thinning, n_procs=1

)

end = time.time()

sampling_time += end - start

samples = (

_samples if samples is None else np.concatenate((samples, _samples), axis=1)

)

starting_points = hopsy.transform(rounded_problem, samples[:, -1, :])

ess = np.min(hopsy.ess(samples))

print("\t\tess", str(ess) + ",", "samples", samples.shape[1])

# transforms back to full space

_samples = np.zeros(

(samples.shape[0], samples.shape[1], polytope.transformation.shape[0])

)

for j in range(samples.shape[0]):

_samples[j] = hopsy.back_transform(

hopsy.Problem(

A=polytope.A,

b=polytope.b,

transformation=polytope.transformation,

shift=polytope.shift,

),

[samples[j, i, :] for i in range(samples.shape[1])],

)

return _samples, iterations, ess, sampling_time

def generate_polytope(name):

if name == "BP5":

bp = hopsy.BirkhoffPolytope(5)

polytope = PolyRound.mutable_classes.polytope.Polytope(A=bp.A, b=bp.b)

polytope.normalize()

parameter_names = ["x" + str(c) for c in polytope.A.columns]

return polytope, "5D Birkhoff Polytope", parameter_names

elif name == "e_coli":

original_polytope = PolyRound.static_classes.parse_sbml_stoichiometry.StoichiometryParser.parse_sbml_cobrapy(

"../extern/hops/resources/e_coli_core/e_coli_core.xml"

)

parameter_names = original_polytope.A.columns

polytope = PolyRound.api.PolyRoundApi.simplify_polytope(original_polytope)

polytope = PolyRound.api.PolyRoundApi.transform_polytope(polytope)

polytope.normalize()

return polytope, "e_coli_core", parameter_names

Benchmark parameters#

Next set up the benchmark parameters

[5]:

target_ess = 1000

proposalTypes = {

"Billiard walk": hopsy.BilliardWalkProposal,

"CHRRT": hopsy.UniformCoordinateHitAndRunProposal,

"OHRR": OverrelaxedHitAndRunProposal,

}

seeds = [1, 2, 3, 4]

problems_to_benchmark = ["BP5", "e_coli"]

Benchmarking#

Sample all problems with all sampling schemes, i.e., two for loops.

[6]:

samples = {}

iterations = {}

ess = {}

times = {}

ess_t = {}

parameter_names = {}

for problem_selection in problems_to_benchmark:

print("Benchmarking {problem_selection}")

samples[problem_selection] = {}

iterations[problem_selection] = {}

ess[problem_selection] = {}

times[problem_selection] = {}

ess_t[problem_selection] = {}

for name, p in proposalTypes.items():

print("\tproposal", name)

# resets problem and starting points

preprocessed_polytope, problem_name, parameter_names[problem_selection] = generate_polytope(

problem_selection

)

steps_per_phase = preprocessed_polytope.A.shape[1] * 20

cheby = hopsy.compute_chebyshev_center(

hopsy.Problem(A=preprocessed_polytope.A, b=preprocessed_polytope.b)

).flatten()

starting_points = [cheby for s in seeds]

if name == "Billiard walk":

(

samples[problem_selection][name],

iterations[problem_selection][name],

ess[problem_selection][name],

times[problem_selection][name],

) = run_multiphase_sampling(

proposal=p,

polytope=preprocessed_polytope,

seeds=seeds,

target_ess=target_ess,

steps_per_phase=steps_per_phase,

starting_points=starting_points,

)

else:

thinning = (

1

if name != "CHRRT"

else max(int(float(preprocessed_polytope.A.shape[1] ** 2) / 6), 1)

)

samples[problem_selection][name], iterations[problem_selection][name], ess[problem_selection][name], times[problem_selection][name] = run_sampling(

proposal=p,

polytope=preprocessed_polytope,

seeds=seeds,

target_ess=target_ess,

steps_per_phase=steps_per_phase,

starting_points=starting_points,

thinning=thinning,

)

ess_t[problem_selection][name] = ess[problem_selection][name] / times[problem_selection][name]

# check convergence

for name, s in samples[problem_selection].items():

print("\t" + name, "rhat:", np.max(hopsy.rhat(s)))

print("\tess", ess[problem_selection])

print("\ttimes", times[problem_selection])

print("\tess/t", ess_t[problem_selection])

Benchmarking {problem_selection}

proposal Billiard walk

Set parameter Username

Academic license - for non-commercial use only - expires 2023-11-30

iter: 1

s_ratio: 1.3847452299003395

ess 231.4835868364245

iter: 2

ess 627.6793244654275

iter: 3

ess 990.5612889618237

iter: 4

ess 1583.2881720652492

proposal CHRRT

iter: 1

ess 447.64227280442987, samples 320

iter: 2

ess 863.8258778056024, samples 640

iter: 3

ess 1324.526979068286, samples 960

proposal OHRR

iter: 1

ess 7.066940654698069, samples 320

iter: 2

ess 8.978791384060571, samples 640

iter: 3

ess 10.822526600431221, samples 960

iter: 4

ess 14.644470085574461, samples 1280

iter: 5

ess 18.35148293619599, samples 1600

iter: 6

ess 16.62222639251434, samples 1920

iter: 7

ess 23.833699742670035, samples 2240

iter: 8

ess 41.20876776311011, samples 2560

iter: 9

ess 57.886912910344414, samples 2880

iter: 10

ess 37.382289849801055, samples 3200

iter: 11

ess 62.344564915244966, samples 3520

iter: 12

ess 79.15850821798082, samples 3840

iter: 13

ess 74.36071172518442, samples 4160

iter: 14

ess 62.95342031751828, samples 4480

iter: 15

ess 113.47926517779857, samples 4800

iter: 16

ess 121.72186371924973, samples 5120

iter: 17

ess 113.3492478009126, samples 5440

iter: 18

ess 127.77424100111836, samples 5760

iter: 19

ess 136.9696137423155, samples 6080

iter: 20

ess 144.16940525794976, samples 6400

iter: 21

ess 139.2192539731686, samples 6720

iter: 22

ess 156.53500273176687, samples 7040

iter: 23

ess 175.14217193906293, samples 7360

iter: 24

ess 182.43273042583925, samples 7680

iter: 25

ess 191.5815328961723, samples 8000

iter: 26

ess 210.41931304680313, samples 8320

iter: 27

ess 224.31970085590208, samples 8640

iter: 28

ess 226.62768566905982, samples 8960

iter: 29

ess 237.75902910720876, samples 9280

iter: 30

ess 243.67493216855976, samples 9600

iter: 31

ess 257.3021980390406, samples 9920

iter: 32

ess 273.8199561717506, samples 10240

iter: 33

ess 294.20112361746465, samples 10560

iter: 34

ess 293.48158227555297, samples 10880

iter: 35

ess 300.45975910082853, samples 11200

iter: 36

ess 317.7535136265973, samples 11520

iter: 37

ess 336.3692862259476, samples 11840

iter: 38

ess 331.9082473463359, samples 12160

iter: 39

ess 346.2916329510095, samples 12480

iter: 40

ess 357.96934334556954, samples 12800

iter: 41

ess 363.54227418015665, samples 13120

iter: 42

ess 383.5858067647125, samples 13440

iter: 43

ess 382.0381927501071, samples 13760

iter: 44

ess 401.5143488947238, samples 14080

iter: 45

ess 420.64939968763684, samples 14400

iter: 46

ess 449.08326901564647, samples 14720

iter: 47

ess 452.80046753169904, samples 15040

iter: 48

ess 450.12839321675506, samples 15360

iter: 49

ess 462.2771927927099, samples 15680

iter: 50

ess 461.9902539847381, samples 16000

iter: 51

ess 481.53977928686703, samples 16320

iter: 52

ess 508.6649066292897, samples 16640

iter: 53

ess 504.20807661962795, samples 16960

iter: 54

ess 521.5147013905246, samples 17280

iter: 55

ess 544.7993417778594, samples 17600

iter: 56

ess 535.5840942302711, samples 17920

iter: 57

ess 551.2635026918439, samples 18240

iter: 58

ess 569.2310737012456, samples 18560

iter: 59

ess 588.0058452604753, samples 18880

iter: 60

ess 593.8488910778029, samples 19200

iter: 61

ess 615.6596139884347, samples 19520

iter: 62

ess 635.4306913517988, samples 19840

iter: 63

ess 646.0405907460345, samples 20160

iter: 64

ess 644.4425753035002, samples 20480

iter: 65

ess 646.8043239236227, samples 20800

iter: 66

ess 664.2919147742286, samples 21120

iter: 67

ess 674.3680356181259, samples 21440

iter: 68

ess 680.3837001518382, samples 21760

iter: 69

ess 698.4208197353897, samples 22080

iter: 70

ess 709.8115678575414, samples 22400

iter: 71

ess 722.2190007599912, samples 22720

iter: 72

ess 729.2378725295657, samples 23040

iter: 73

ess 749.2232824472641, samples 23360

iter: 74

ess 750.6074062950678, samples 23680

iter: 75

ess 779.3189674698466, samples 24000

iter: 76

ess 793.5895044612517, samples 24320

iter: 77

ess 788.1310770304416, samples 24640

iter: 78

ess 813.4174519025988, samples 24960

iter: 79

ess 818.7102630963842, samples 25280

iter: 80

ess 825.1911302157964, samples 25600

iter: 81

ess 824.4797274295319, samples 25920

iter: 82

ess 832.4798666785565, samples 26240

iter: 83

ess 834.0921425432099, samples 26560

iter: 84

ess 856.1355113591557, samples 26880

iter: 85

ess 854.4998018509373, samples 27200

iter: 86

ess 870.6301466142817, samples 27520

iter: 87

ess 875.9356221946373, samples 27840

iter: 88

ess 891.1700748102612, samples 28160

iter: 89

ess 903.7960059093062, samples 28480

iter: 90

ess 886.178835961187, samples 28800

iter: 91

ess 867.117458057039, samples 29120

iter: 92

ess 881.9490684846811, samples 29440

iter: 93

ess 890.5241990028626, samples 29760

iter: 94

ess 913.5607895586063, samples 30080

iter: 95

ess 932.2398882121701, samples 30400

iter: 96

ess 951.138292074147, samples 30720

iter: 97

ess 964.61299347187, samples 31040

iter: 98

ess 983.6391765927045, samples 31360

iter: 99

ess 979.9236321935331, samples 31680

iter: 100

ess 1003.9031482727333, samples 32000

Billiard walk rhat: 1.0061219415504485

CHRRT rhat: 1.006207630257062

OHRR rhat: 1.0070773467273284

ess {'Billiard walk': 1583.2881720652492, 'CHRRT': 1324.526979068286, 'OHRR': 1003.9031482727333}

times {'Billiard walk': 0.07052469253540039, 'CHRRT': 1.0217652320861816, 'OHRR': 6.2099902629852295}

ess/t {'Billiard walk': 22450.12512845066, 'CHRRT': 1296.3124379990331, 'OHRR': 161.65937558010646}

Benchmarking {problem_selection}

proposal Billiard walk

iter: 1

s_ratio: 11.963156850679276

ess 4.872243577042705

iter: 2

s_ratio: 23.96780496221293

ess 5.133013163411473

iter: 3

s_ratio: 33.92514821624308

ess 7.03213882586069

iter: 4

s_ratio: 23.480721222145224

ess 21.114165738738418

iter: 5

s_ratio: 2.3472103720655264

ess 652.8113104108883

iter: 6

s_ratio: 2.1462979782508014

ess 957.7149285139645

iter: 7

ess 2013.7358952432155

proposal CHRRT

iter: 1

ess 524.5375595198544, samples 480

iter: 2

ess 1188.850821488119, samples 960

proposal OHRR

iter: 1

ess 6.269631083291744, samples 480

iter: 2

ess 7.323446416265532, samples 960

iter: 3

ess 8.888495302103292, samples 1440

iter: 4

ess 10.891013536977999, samples 1920

iter: 5

ess 10.43496232034734, samples 2400

iter: 6

ess 13.882887827988935, samples 2880

iter: 7

ess 15.49037801963015, samples 3360

iter: 8

ess 28.348358594863743, samples 3840

iter: 9

ess 33.099764817877734, samples 4320

iter: 10

ess 33.0052664057525, samples 4800

iter: 11

ess 32.11942312187279, samples 5280

iter: 12

ess 31.189888275506522, samples 5760

iter: 13

ess 34.00693166294697, samples 6240

iter: 14

ess 28.89547451072042, samples 6720

iter: 15

ess 38.45378494069976, samples 7200

iter: 16

ess 65.2195031632737, samples 7680

iter: 17

ess 76.91470052686886, samples 8160

iter: 18

ess 80.54628640266824, samples 8640

iter: 19

ess 120.38209736486483, samples 9120

iter: 20

ess 117.68114663878684, samples 9600

iter: 21

ess 124.75070422853102, samples 10080

iter: 22

ess 116.34655760555961, samples 10560

iter: 23

ess 124.18592232344665, samples 11040

iter: 24

ess 123.19323837905226, samples 11520

iter: 25

ess 144.46386173862024, samples 12000

iter: 26

ess 148.09968539854086, samples 12480

iter: 27

ess 147.95311563377672, samples 12960

iter: 28

ess 145.98511902334084, samples 13440

iter: 29

ess 158.51895860514378, samples 13920

iter: 30

ess 169.69689177481715, samples 14400

iter: 31

ess 174.49127005693168, samples 14880

iter: 32

ess 180.42261318891497, samples 15360

iter: 33

ess 192.96616757403754, samples 15840

iter: 34

ess 189.6869567126621, samples 16320

iter: 35

ess 185.9907738397535, samples 16800

iter: 36

ess 192.82170148987922, samples 17280

iter: 37

ess 204.65635395883973, samples 17760

iter: 38

ess 207.05551739174868, samples 18240

iter: 39

ess 216.1649396014175, samples 18720

iter: 40

ess 218.65560668780427, samples 19200

iter: 41

ess 224.67365262048054, samples 19680

iter: 42

ess 233.2337269481389, samples 20160

iter: 43

ess 245.04918297452195, samples 20640

iter: 44

ess 253.35676743095638, samples 21120

iter: 45

ess 268.2333892323007, samples 21600

iter: 46

ess 273.180948810766, samples 22080

iter: 47

ess 272.81001761513676, samples 22560

iter: 48

ess 257.916065225228, samples 23040

iter: 49

ess 253.42112849368127, samples 23520

iter: 50

ess 254.7582764932103, samples 24000

iter: 51

ess 258.4069792053966, samples 24480

iter: 52

ess 273.86342243004873, samples 24960

iter: 53

ess 284.17271357899097, samples 25440

iter: 54

ess 297.20253324691765, samples 25920

iter: 55

ess 302.52499273424024, samples 26400

iter: 56

ess 286.611087547339, samples 26880

iter: 57

ess 280.915655001863, samples 27360

iter: 58

ess 288.3164101913765, samples 27840

iter: 59

ess 300.42292722611387, samples 28320

iter: 60

ess 310.9952497255533, samples 28800

iter: 61

ess 313.1100359628566, samples 29280

iter: 62

ess 289.67804817589433, samples 29760

iter: 63

ess 293.2058222243669, samples 30240

iter: 64

ess 313.40643896165045, samples 30720

iter: 65

ess 293.8918695327726, samples 31200

iter: 66

ess 312.2361613765037, samples 31680

iter: 67

ess 322.9219106586677, samples 32160

iter: 68

ess 323.7545394540001, samples 32640

iter: 69

ess 346.32520571689747, samples 33120

iter: 70

ess 345.85424928547764, samples 33600

iter: 71

ess 358.3048911770299, samples 34080

iter: 72

ess 361.084743896345, samples 34560

iter: 73

ess 365.47086073740763, samples 35040

iter: 74

ess 383.39780160630573, samples 35520

iter: 75

ess 392.63094158894984, samples 36000

iter: 76

ess 398.9072807479651, samples 36480

iter: 77

ess 384.2964391944638, samples 36960

iter: 78

ess 384.7667317498958, samples 37440

iter: 79

ess 391.4770094651921, samples 37920

iter: 80

ess 386.190724216923, samples 38400

iter: 81

ess 382.85858663180676, samples 38880

iter: 82

ess 399.76548583077005, samples 39360

iter: 83

ess 406.45084635182735, samples 39840

iter: 84

ess 414.13634315764835, samples 40320

iter: 85

ess 424.17051913321086, samples 40800

iter: 86

ess 431.3602734254215, samples 41280

iter: 87

ess 422.556096368053, samples 41760

iter: 88

ess 432.7105172995434, samples 42240

iter: 89

ess 442.42073630007786, samples 42720

iter: 90

ess 451.6280904320013, samples 43200

iter: 91

ess 454.8288496773367, samples 43680

iter: 92

ess 459.7049865712104, samples 44160

iter: 93

ess 463.64262501125734, samples 44640

iter: 94

ess 454.3284616055022, samples 45120

iter: 95

ess 458.5231384856301, samples 45600

iter: 96

ess 467.2708211331568, samples 46080

iter: 97

ess 483.29399688389447, samples 46560

iter: 98

ess 489.9114233381726, samples 47040

iter: 99

ess 482.25565920814455, samples 47520

iter: 100

ess 488.76061956249976, samples 48000

iter: 101

ess 501.2216755056666, samples 48480

iter: 102

ess 509.151199607025, samples 48960

iter: 103

ess 512.2207523382951, samples 49440

iter: 104

ess 522.8707944154959, samples 49920

iter: 105

ess 530.9119480393842, samples 50400

iter: 106

ess 534.6566181063171, samples 50880

iter: 107

ess 537.3787114531068, samples 51360

iter: 108

ess 549.4299895513893, samples 51840

iter: 109

ess 545.6206413795908, samples 52320

iter: 110

ess 554.906174875836, samples 52800

iter: 111

ess 573.7119220600656, samples 53280

iter: 112

ess 569.4093051480813, samples 53760

iter: 113

ess 582.5343562401797, samples 54240

iter: 114

ess 592.634209761512, samples 54720

iter: 115

ess 601.744572032569, samples 55200

iter: 116

ess 605.1492755287037, samples 55680

iter: 117

ess 609.6353624312007, samples 56160

iter: 118

ess 613.5433468342083, samples 56640

iter: 119

ess 612.6171354877015, samples 57120

iter: 120

ess 618.69924574814, samples 57600

iter: 121

ess 628.4239828653435, samples 58080

iter: 122

ess 639.0665758373954, samples 58560

iter: 123

ess 640.8951662610747, samples 59040

iter: 124

ess 658.5648101217669, samples 59520

iter: 125

ess 672.2232482053716, samples 60000

iter: 126

ess 692.9192879813564, samples 60480

iter: 127

ess 701.1431658990771, samples 60960

iter: 128

ess 702.7340761365899, samples 61440

iter: 129

ess 707.3541719676651, samples 61920

iter: 130

ess 701.0297752389649, samples 62400

iter: 131

ess 706.8995244779946, samples 62880

iter: 132

ess 701.4908108847167, samples 63360

iter: 133

ess 689.3472197901951, samples 63840

iter: 134

ess 703.0003376779357, samples 64320

iter: 135

ess 708.2335934188095, samples 64800

iter: 136

ess 725.1838266734792, samples 65280

iter: 137

ess 735.3205579025181, samples 65760

iter: 138

ess 737.0318459845154, samples 66240

iter: 139

ess 754.6120288318377, samples 66720

iter: 140

ess 749.599634946338, samples 67200

iter: 141

ess 752.9040639999432, samples 67680

iter: 142

ess 766.2687113077193, samples 68160

iter: 143

ess 781.7276660620391, samples 68640

iter: 144

ess 790.6849726861673, samples 69120

iter: 145

ess 795.7596388427546, samples 69600

iter: 146

ess 799.5584972022341, samples 70080

iter: 147

ess 798.8247767068805, samples 70560

iter: 148

ess 797.1372057741078, samples 71040

iter: 149

ess 794.4879887657187, samples 71520

iter: 150

ess 798.4262537658255, samples 72000

iter: 151

ess 821.3439821865887, samples 72480

iter: 152

ess 836.785381934779, samples 72960

iter: 153

ess 833.192533643803, samples 73440

iter: 154

ess 824.2367310967214, samples 73920

iter: 155

ess 833.027337432633, samples 74400

iter: 156

ess 847.6213170291394, samples 74880

iter: 157

ess 883.55387018975, samples 75360

iter: 158

ess 888.4084908886016, samples 75840

iter: 159

ess 878.3560544642794, samples 76320

iter: 160

ess 867.8099320680594, samples 76800

iter: 161

ess 865.3342989956125, samples 77280

iter: 162

ess 868.7924805999716, samples 77760

iter: 163

ess 879.929282710091, samples 78240

iter: 164

ess 890.2971775291669, samples 78720

iter: 165

ess 906.4353233136444, samples 79200

iter: 166

ess 889.0828926155659, samples 79680

iter: 167

ess 873.9690238366554, samples 80160

iter: 168

ess 877.7212806671587, samples 80640

iter: 169

ess 894.2714684714002, samples 81120

iter: 170

ess 904.8308346920122, samples 81600

iter: 171

ess 912.2855347087361, samples 82080

iter: 172

ess 909.33882150071, samples 82560

iter: 173

ess 897.4864706687231, samples 83040

iter: 174

ess 899.1382480787717, samples 83520

iter: 175

ess 904.4533159265078, samples 84000

iter: 176

ess 910.0445241340408, samples 84480

iter: 177

ess 922.005723922873, samples 84960

iter: 178

ess 928.7932859860817, samples 85440

iter: 179

ess 908.783058613329, samples 85920

iter: 180

ess 895.3797915865479, samples 86400

iter: 181

ess 859.4890207031966, samples 86880

iter: 182

ess 845.2343124097703, samples 87360

iter: 183

ess 839.1122789551176, samples 87840

iter: 184

ess 844.7127039219682, samples 88320

iter: 185

ess 846.3151169334295, samples 88800

iter: 186

ess 842.6175583860781, samples 89280

iter: 187

ess 839.5348864903817, samples 89760

iter: 188

ess 845.649272511435, samples 90240

iter: 189

ess 850.4409680273976, samples 90720

iter: 190

ess 854.5363180594184, samples 91200

iter: 191

ess 864.6976297034453, samples 91680

iter: 192

ess 870.1598273380728, samples 92160

iter: 193

ess 875.8863528631218, samples 92640

iter: 194

ess 880.6299643478253, samples 93120

iter: 195

ess 887.41821530269, samples 93600

iter: 196

ess 893.1249868133013, samples 94080

iter: 197

ess 896.4293587625341, samples 94560

iter: 198

ess 897.194064688584, samples 95040

iter: 199

ess 901.6836008572618, samples 95520

iter: 200

ess 905.9720995421688, samples 96000

iter: 201

ess 915.0415219542131, samples 96480

iter: 202

ess 913.8491612710968, samples 96960

iter: 203

ess 909.5235691609382, samples 97440

iter: 204

ess 901.066821525769, samples 97920

iter: 205

ess 903.6897456054767, samples 98400

iter: 206

ess 905.5696263301422, samples 98880

iter: 207

ess 906.1574968563857, samples 99360

iter: 208

ess 914.8511270258749, samples 99840

iter: 209

ess 919.2598470161788, samples 100320

iter: 210

ess 931.6724515215465, samples 100800

iter: 211

ess 938.2402200850552, samples 101280

iter: 212

ess 943.4875208804775, samples 101760

iter: 213

ess 949.8135147561189, samples 102240

iter: 214

ess 955.7309221867911, samples 102720

iter: 215

ess 970.4592801029347, samples 103200

iter: 216

ess 974.074172962726, samples 103680

iter: 217

ess 979.1099965408358, samples 104160

iter: 218

ess 973.4666570148554, samples 104640

iter: 219

ess 983.7461092409185, samples 105120

iter: 220

ess 988.1624730606339, samples 105600

iter: 221

ess 986.9052574352414, samples 106080

iter: 222

ess 988.65235118233, samples 106560

iter: 223

ess 987.6559013720048, samples 107040

iter: 224

ess 992.0311000409187, samples 107520

iter: 225

ess 984.9431648604796, samples 108000

iter: 226

ess 980.0972109888816, samples 108480

iter: 227

ess 986.1343984234693, samples 108960

iter: 228

ess 986.3988313773265, samples 109440

iter: 229

ess 990.7939674138657, samples 109920

iter: 230

ess 997.9588361482485, samples 110400

iter: 231

ess 1004.1998163525403, samples 110880

Billiard walk rhat: 1.0024606528800506

CHRRT rhat: 1.010601159852741

OHRR rhat: 1.0063622147724012

ess {'Billiard walk': 2013.7358952432155, 'CHRRT': 1188.850821488119, 'OHRR': 1004.1998163525403}

times {'Billiard walk': 1.5347769260406494, 'CHRRT': 0.9749472141265869, 'OHRR': 19.83063769340515}

ess/t {'Billiard walk': 1312.0707387999137, 'CHRRT': 1219.400193428071, 'OHRR': 50.63880606756764}

Visualizing the results#

[7]:

title_fs = 32

label_fs = 20

tick_fs = 16

legend_fs = 16

img_format = "pdf"

[8]:

times_to_plot = {}

ess_t_to_plot = {}

# restructure dicts for easier plotting

for n,p in proposalTypes.items():

times_to_plot[n] = {}

ess_t_to_plot[n] = {}

for problem_selection in problems_to_benchmark:

times_to_plot[n][problem_selection] = times[problem_selection][n]

ess_t_to_plot[n][problem_selection] = ess_t[problem_selection][n]

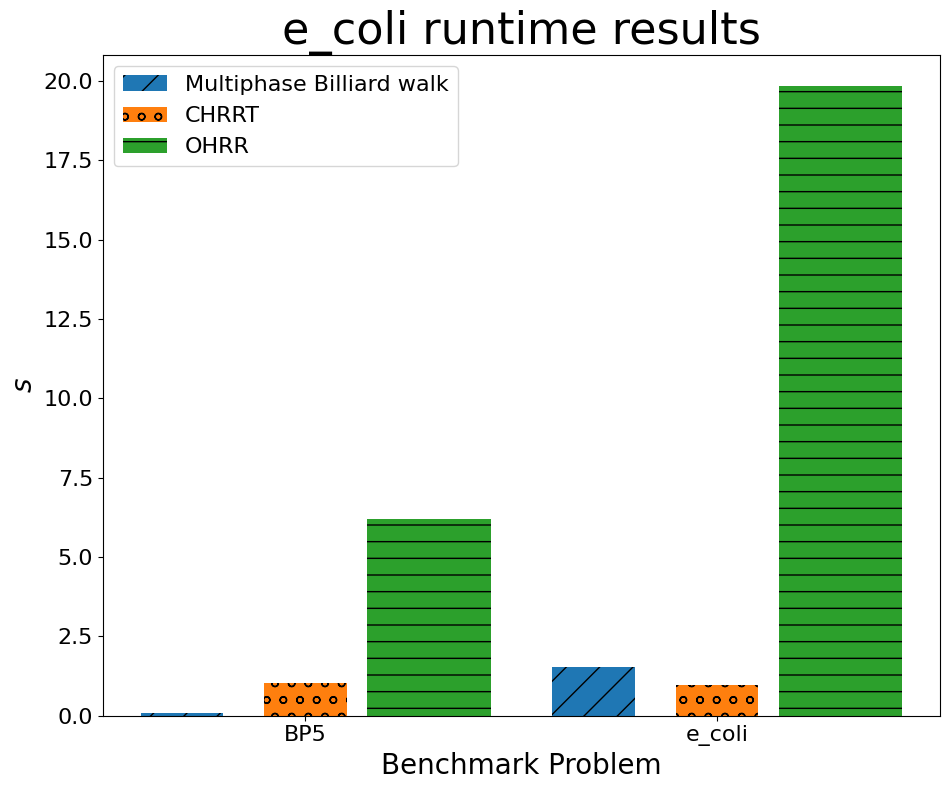

visualize runtimes#

[9]:

plt.figure(figsize=(1.5 * 6.4, 8))

plt.title(problem_selection + " runtime results", fontsize=title_fs)

X_ticks = problems_to_benchmark

X_axis = np.arange(len(X_ticks))

plt.bar(X_axis - 0.3, times_to_plot["Billiard walk"].values(), 0.2, hatch="/", label="Multiphase Billiard walk")

plt.bar(X_axis - 0.0, times_to_plot["CHRRT"].values(), 0.2, hatch="o", label="CHRRT")

plt.bar(X_axis + .3, times_to_plot["OHRR"].values(), 0.3, hatch='-', label='OHRR')

plt.ylabel(r"$s$", fontsize=label_fs)

plt.xlabel(r"Benchmark Problem", fontsize=label_fs)

plt.xticks(X_axis, X_ticks, fontsize=tick_fs)

plt.yticks(fontsize=tick_fs)

plt.tight_layout()

plt.legend(fontsize=legend_fs)

plt.savefig("BenchmarkRuntimeResults.pdf")

plt.show()

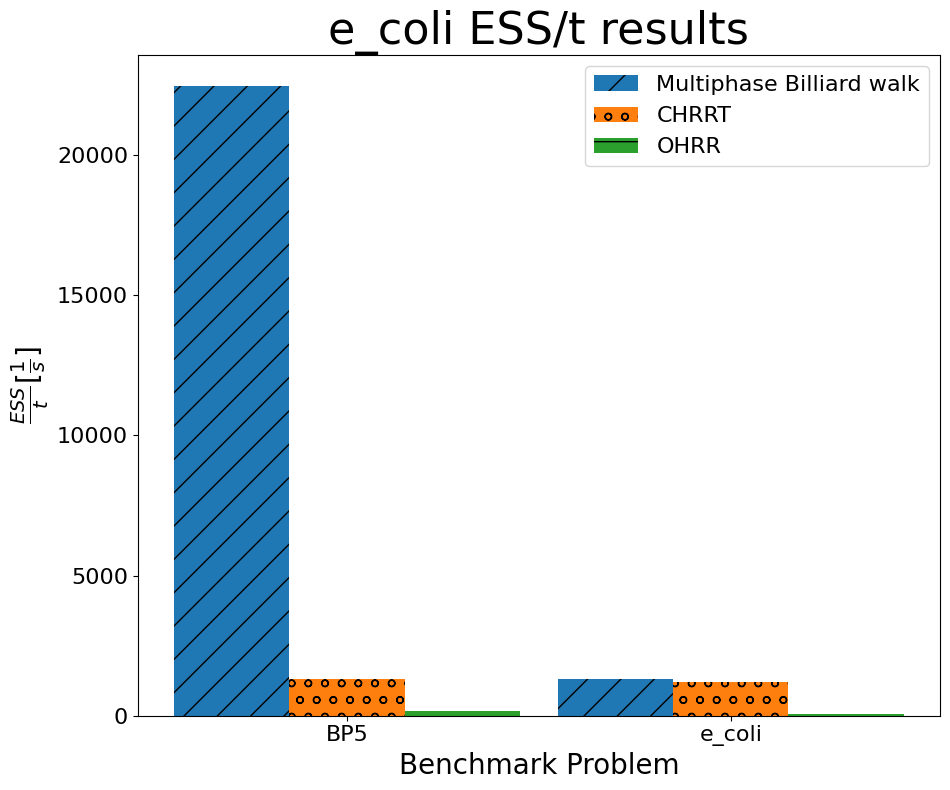

visualize ESS/t [1/s]#

[10]:

plt.figure(figsize=(1.5 * 6.4, 8))

plt.title(problem_selection + " ESS/t results", fontsize=title_fs)

X_ticks = problems_to_benchmark

X_axis = np.arange(len(X_ticks))

plt.bar(X_axis - 0.3, ess_t_to_plot["Billiard walk"].values(), 0.3, hatch="/", label="Multiphase Billiard walk")

plt.bar(X_axis - 0.0, ess_t_to_plot["CHRRT"].values(), 0.3, hatch="o", label="CHRRT")

plt.bar(X_axis + .3, ess_t_to_plot["OHRR"].values(), 0.3, hatch='-', label='OHRR')

plt.ylabel(r"$\frac{\mathit{ESS}}{t} [\frac{1}{s}]$", fontsize=label_fs)

plt.xlabel(r"Benchmark Problem", fontsize=label_fs)

plt.xticks(X_axis, X_ticks, fontsize=tick_fs)

plt.yticks(fontsize=tick_fs)

plt.tight_layout()

plt.legend(fontsize=legend_fs)

plt.savefig("BenchmarkPerformanceResults.pdf")

plt.show()

Conclusion#

In this benchmark the overrelaxed hit and run does not perform well against modern sampling algorithms. Furthermore we can see, that the performance is problem dependendent.

The values of the benchmark are not the main result of this script, however. Much more important is that hopsy is flexible enough to quickly construct fair benchmarks comparing novel algorithms with existing implementations. Furthermore all sampling algorithms access the models (polytopes) via the same interface, which reduces work for the methods researcher.

Appendix#

Visualizing the samples#

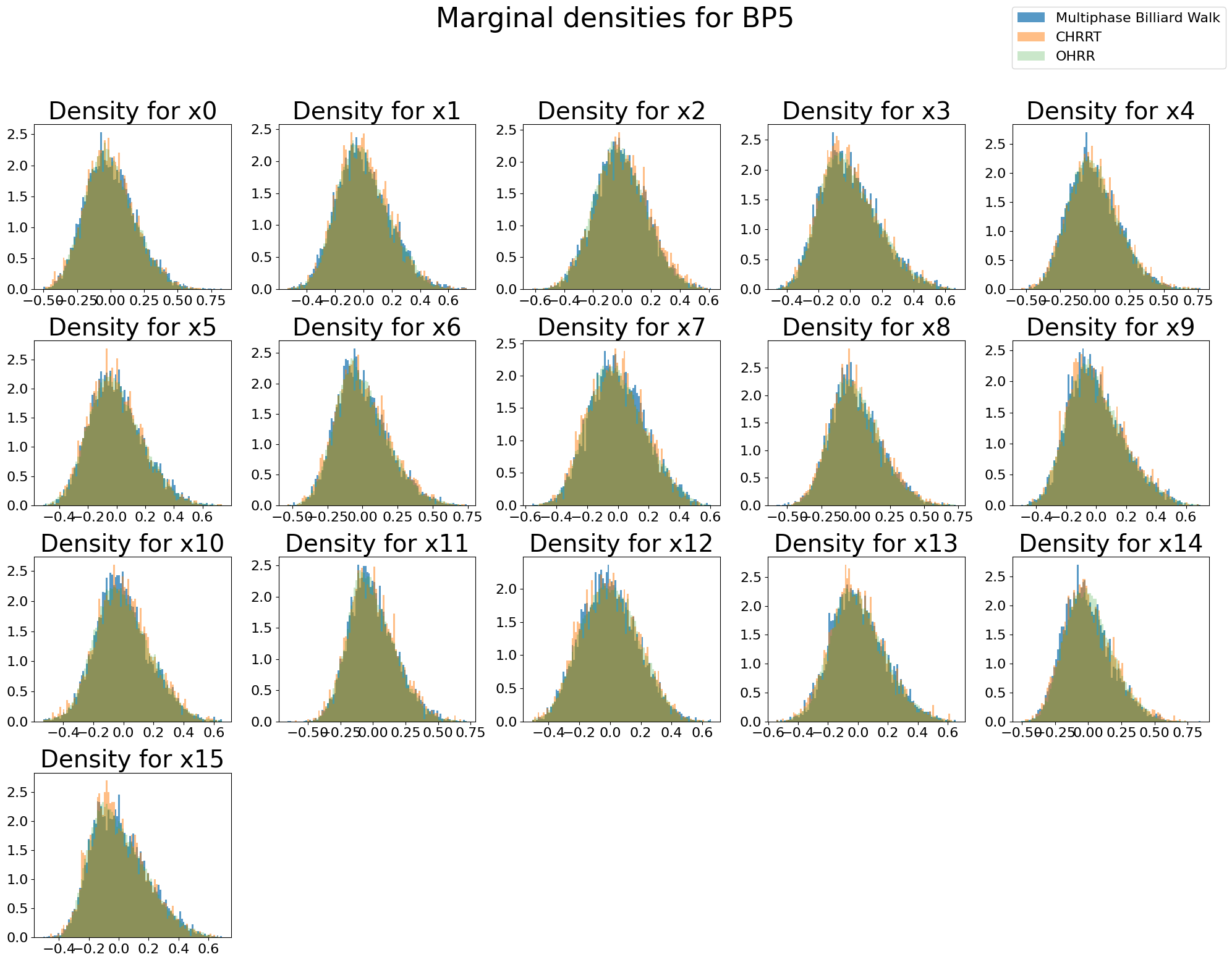

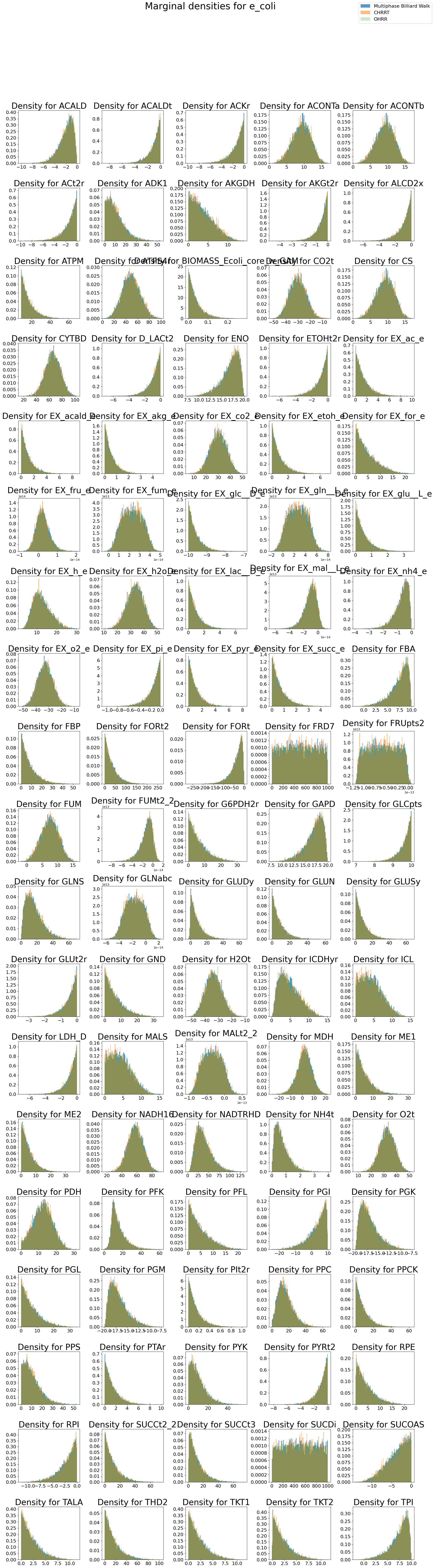

[11]:

for problem_selection in samples.keys():

n_dims = samples[problem_selection]["CHRRT"].shape[2]

n_cols = 5

n_rows = int(n_dims / n_cols) + 1

plt.figure(figsize=(n_cols * 4, n_rows * 4))

plt.subplot(n_rows, n_cols, 1)

plt.suptitle(

"Marginal densities for " + problem_selection, y=1.0, fontsize=title_fs

)

for dim in range(n_dims):

plt.subplot(n_rows, n_cols, dim + 1)

plt.title(f"Density for {parameter_names[problem_selection][dim]}", fontsize=title_fs - 4)

_, bins, _ = plt.hist(

np.concatenate(samples[problem_selection]["Billiard walk"], axis=0)[:, dim],

bins=100,

density=True,

label="Multiphase Billiard Walk" if dim == 0 else None,

alpha=0.75,

color="C0",

)

_ = plt.hist(

np.concatenate(samples[problem_selection]["CHRRT"], axis=0)[:, dim],

bins=bins,

alpha=0.5,

density=True,

label="CHRRT" if dim == 0 else None,

color="C1",

)

_ = plt.hist(

np.concatenate(samples[problem_selection]["OHRR"], axis=0)[:, dim],

bins=bins,

alpha=0.25,

density=True,

label="OHRR" if dim == 0 else None,

color="C2",

)

plt.xticks(fontsize=tick_fs)

plt.yticks(fontsize=tick_fs)

plt.figlegend(fontsize=legend_fs)

plt.tight_layout(rect=[0, 0.03, 1, 0.95])

plt.show()